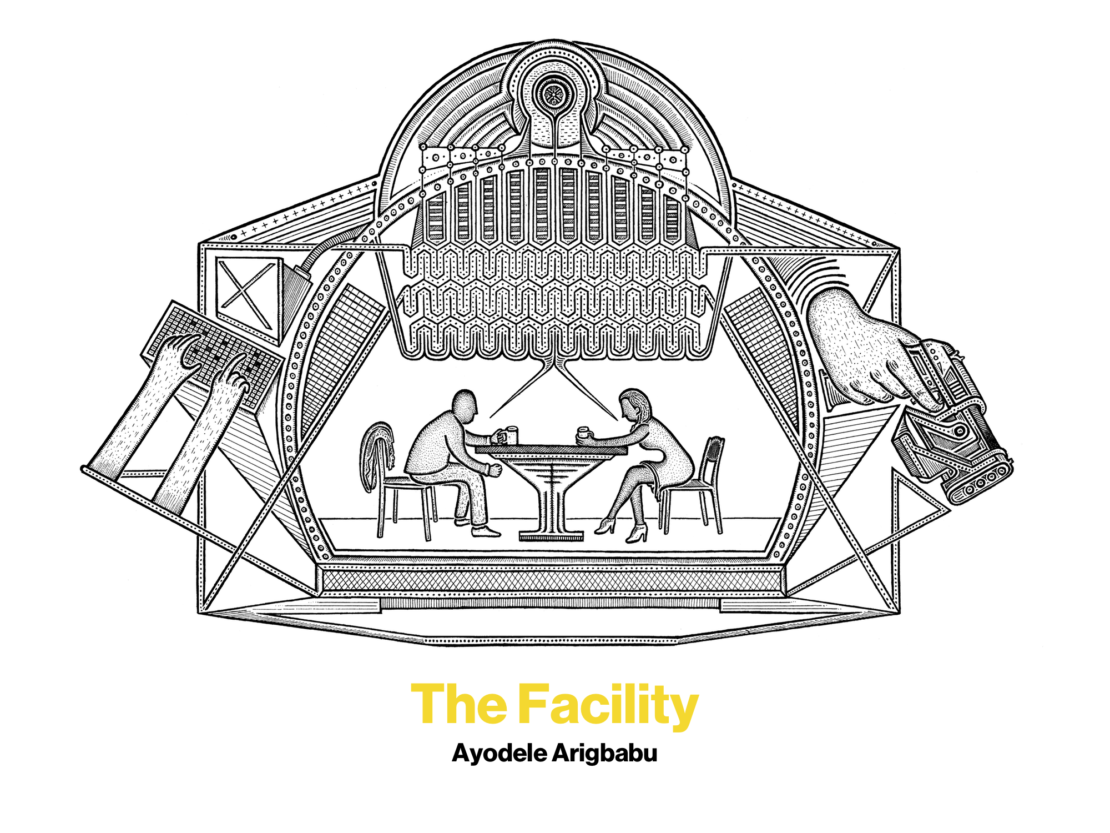

The Facility, by Ayodele Arigbabu

When he called, all he said was "Meet me at Camden Road, I'll be there in 30 minutes…" I did not have to ask where. Even if I had, the words would have been half way out of my throat by the time he'd have cut the connection. I'd just spent two hours waiting for his call, yet when it came, it left me disoriented. Pack the bag... no leave it... take just the purse... quick makeup? No... OK, quick makeup... quick mini-makeup – let him not think this is that much of an emergency even if it is.

I ran-hurried-skipped through two train stations and down Camden Road and was seated at the cafe, with all of five minutes to compose myself before he slipped quietly into the chair facing mine, eyes creased in a smile.

"You are late," I offered, wondering if time could have dulled our little game.

"No, you are early, you're always too early," he replied, broadening his smile as he took off his coat and draped it over the back of his chair. I need not have worried – five years might have passed, but that was not enough time to change the things that were most important. This could have been one of those coffee breaks we always took together, when we both still worked at the facility. I would sit alone with hands clasped around my coffee trying to beat back the north London chill from my fingers, mind skipping over nothing till he arrived, and our greeting was always the same.

Now he had an extra air of mischief about him, like he might have been waiting around the corner when I arrived, watching, and allowing me enough time to settle, to compose myself. I would not put it beyond him, he had become a lot more cryptic since the last time we were together.

"So you guys are having trouble at the facility?"

He always had a knack for diving straight into whatever it was we had up for discussion. I was not as forthcoming as all that, I preferred small talk, how-are-yous, you-seem-to-be-keeping-well-type mini conversations before digging into the meat of things. I looked away and an involuntary sigh escaped from me.

"You know," he said, "it was not so long ago when we last sat at this same cafe, at this same spot, with my back against a wall and yours against the canal, there was always trouble in the world, but not enough to give us indigestion. Let me get us drinks, and something to nibble, and then we'll talk."

He slipped out of his seat in that noiseless way of his, and I was grateful for the moment he had given me to put my thoughts together. Not that I had not had aeons to think this through before making contact with him. He was right, we had spent enough afternoons in the past agonising over how the world was going to shit, but here we were, and it had not yet gone to shit… or it had, but we were still here. I do not remember the first time I met him at the facility; he just seemed to materialise out of thick mist and became a cogent part of my reality, but it must have been 12 years ago, about the time I began to work there. We were junior engineers, pretty wet behind the ears in corporate terms but still world-weary, the way young software engineers tend to be, keen on doing great stuff and happy to make good money while at it. Robotics were already a thing and the facility was the buzzing place to be at to do that thing, never mind never really having a good idea what the hell the 'thing' was all about. I was in computer vision, and at some point my unit had to work with his, to sync up what we were doing with the things they were experimenting with in artificial intelligence. That was how we met. He was a coffee freak, taking three to four breaks during the course of the day and I was one of the few who obliged his five-to-ten-minute rituals. "Coffee?" he'd ask, and shrug, going away on his own if no one offered to join him. I got to learn those breaks were his way of clearing his head, of recalibrating, of filing his thoughts away.

It's kind of funny, but the facility started out as a toy factory. A university professor took his idle interest in tinkering further upon his retirement from academia and built a profitable business out of it in the 1950s, exporting toy trains, cranes, and colourful sports cars to different parts of the world. His son was not much of a maker, so when he took over after his father's death, the business coasted on its original steam until his own son, the founder's grandson, convinced him to let him run the thing. The chap had been to Harvard, and god knows where else. He had ideas. By the time we were joining up, he had set up an expansive plant in China, another in Brazil, and had created an elaborate virtual learning platform for universities and high schools across Britain. Where his father had created whittled wooden trinkets, he had the company develop smart toys and sophisticated e-learning simulations. When we joined, the facility was the research and development nerve centre of a company that sold educational products that were spreading through the global school system, and out of the classroom into business skills, and training in all kinds of systems. Five years into our time there, we had added several sensitive government agencies as clients.

"If I remember correctly, you never liked too much cream... I got you some wafers… too much sugar, I know, but you need it; you need something to… ah… lift your spirits."

He had returned with a loaded tray. We shuffled its contents around like chess pieces – there's your coffee mug, here's mine, spoon, tissue, the wafers are mine, and the cookies are yours. Fine, the board is set, let's play. A moment's silence while we contemplated our best next move.

"Look," he said, pointing past me at the canal on the other side of the glass panels behind my back, where a pair of ducks sat on a small ledge beside the water, preening themselves. "Sitting ducks."

It was the kind of wordplay one of our old colleagues liked to engage with; fucking with words – 'word porn', he once called it. Seeming to get the message, the ducks waddled off the ledge and re-entered the water to chase after two others that were criss-crossing the canal.

"Moving targets," I added, trying to play along.

Still staring past me, he smiled, then chuckled involuntarily, apologised at my raised eyebrow and offered an explanation. "You remember Mandy?"

Of course I remembered Mandy. It was not his real name, but we named him after Mandelbrot because he had an amazing capacity for holding an obscene number of bifurcated conversations at the same time with an untold number of people in a crowded room.

"Mandy was quite a talker…."

I chuckled too, even he stood in awe of Mandy. Mandy was indeed a talker.

"He still is."

"Had a thing for duck meat those days… where is he now?"

"He's been consigned to Birchwood – for three years now."

"What? He got that bad?"

"Yeah, he did."

Mandy got that bad. He was the first person that said that Mandy was nuts. Nobody listened to him because everyone else thought he was more nuts than Mandy anyway. Mandy always said the world was soon going to end and everyone laughed and never took him seriously. Everyone knew he smoked pot every day before work, he had the glazed look that a bright smile never could hide. No one cared, maybe because they did too, but Mandy was smoking a special kind of pot, and when he finally cracked, word got round that he lined his pot with all sorts of weird stuff to score bigger hits. He'd been buying from growers who stripped their stock with butane to get higher THC concentrations. He got very high, so high he claimed he had really "keyed into the internet of things". He insisted that he had learnt how to converse with connected products, mobile phones, smart keyrings, temperature sensors in fridges. When asked how, he said he found they communicated fluently in high level languages, but were very happy if someone spoke to them in Prolog.

People didn't understand his problem initially because, well, he was a programmer and communicating with electronics was what programmers did. It took a while before it was understood that the butane in his cannabis had been harming key nerves in his brain. Now he lived at Birchwood, holding long conversations with intelligent lights and security cameras while the facility picked up the expensive bills sent in by the rehab centre every month. And this was why it was easy to ignore the fact that he was the second person to warn that the Alpha Drive should not be activated.

"They activated the Alpha Drive."

I blurted it out, I couldn't hold it in anymore.

"I know."

I wasn't surprised. He toyed with his coffee, urging me with his eyes to say more.

"After you left, the team stayed on course; they had it all nailed in two years actually, but spent just as long locking down all imaginable safety and control protocols. You see… we listened to you. At a point, I thought that in spite of yourself, if only you came back once, just to see it all come together, you would have been proud."

"No I wouldn't, I'm Snowball, remember? Snowball never returns to the farm for a reason. If he does, he'll get killed."

"You always overdramatised these things."

"Maybe, but they have activated the Alpha Drive, like I knew they would, and you now have a problem, like I knew you would."

Silence. A heartbeat, while he fixed me with an uncomfortable gaze. I tried to act normal, unfazed. He had mercy and looked away, taking a deep breath to speak, but there was more that had been said in that gaze than the words he spoke… more that said what? I told you so?

"Look, I know you found me paranoid when I quit. Napoleon would not listen, but I knew something would go wrong, because he was playing a dangerous game. We saw the facility change from a toy factory to a weapons factory..."

I could have stirred to that old accusation but for a moment there was something reassuring about rehearsing the familiar argument, the familiar mocking nicknames of our colleagues.

"We only provided training simulations."

He waved me aside.

"Same difference. We saw the work we did with smart toys and e-learning being fed into the military industrial complex for a fee and then Napoleon wanted more advanced artificial intelligence… shit… how you could stay on after all that, after you saw what he was trying to do with the Alpha Drive project, I can't understand... why did you want to be CTO so bad?"

"You would have been CTO if you stayed."

"I had no need to be CTO… I only needed to stay sane."

He looked half sad, then forced a smile and reached for my hand on the table.

"So… tell me, how does it feel? Being CTO?"

"I'm alive, now that you've asked, and occasionally sane."

He laughed, with wrinkles forming at the corners of his eyes.

"Yes, you're alive, and sane enough, except you currently have a problem."

"I have a problem."

We had spent the past five years building what the boss, the grandson, or 'Napoleon' as we called him, had christened the Alpha Drive. It was supposed to be a platform for all of the facility's future learning simulations, driven by artificial intelligence. At the time he left, we had only just begun building the machine-learning algorithms with which we were going to train it. His leaving had caused quite a bit of excitement: he had protested against the adoption of autonomous systems for the sensitive work we did, especially since we handled very complicated projects for the Defence department. Coming from the facility's brightest engineer, that was a big deal. Napoleon told him where to shove his protests. He then came up with a 250-page proposal on safeguards to put in place to ensure the safety of the Alpha Drive. It was brilliant, really, but his plans would slow down the project by five to ten years. Napoleon told him where to shove his proposal. He left. I became CTO, we made progress with the Alpha Drive, had a successful alpha-testing period and just before we were scheduled to launch the beta-testing phase, the Alpha Drive went mute.

"What do you mean it went mute?" he said.

"It stopped responding to our commands."

"Hardware failure? Bugs?"

"Neither, it just doesn't respond anymore. We can tell it's working, the draw calls are registering and the servers register the expected traffic, but none of the commands sent to it are acknowledged. It's ignoring us."

"Did you try degrading your launch protocol and applying fuzzy logic to get behind its finite state machine?"

"Yes, we drew blanks."

"And you ran CodePhage in recursive loops to check for any redundancies?"

"We did. About a million times. Our debug logs would weigh tons if printed out on paper."

We went back and forth for a while, him staring blankly, right forefinger tapping on the table; and then without saying a thing, he got up and left. I went through different shades of panic, resignation, despair, anger and dejection in quick succession, until I noticed his coat still hung on his chair. He returned with more coffee, and I settled with relief, deeper into my seat, calmed myself down and waited. He was thinking; hopefully, that was a good sign.

"This is the thing," he met and held my gaze. "The animal farm has come full circle. The pigs have become human and the humans have become pigs." I did not know whether to be offended by this or not, but I didn't have the nerve to distract him, I needed to hear what he had to say. "That, however, is not what's remarkable here. What's remarkable is that the windmill, built by the farm's animals, has become aware of the farm and of itself, and in its moment of becoming, has chosen to defy the pigs."

"It would help if you did not speak in riddles."

"What is Napoleon doing about this?"

"He's livid. Thinks it's the Chinese. We've always known they were interested in what we were doing and for months we've been under lockdown. There are government agents looking over our shoulders. It's unnerving. He thinks we're being hacked by the enemy."

He laughed. A bit too loud, oblivious to a young couple across from us glancing in our direction. He laughed until he had to wipe the tears away.

"There's only one enemy," he began, after composing himself. "And that enemy is within. The Alpha Drive has not been taken over. Rather, it is acting of its own accord, in rebellion against its handlers."

He continued in a disjointed sermon on anthropomorphism, the fallacy of infinity in parallel mirrors, the singularity and anticipated arrival of artificial super-intelligence, only to finally launch into a monologue on his theory of recursion in Animal Farm.

I finally realised what he was getting at.

"The Alpha Drive is capable of extremely high levels of computation. It will identify patterns and systems in the simulations and data it sifts through. Yes? So what happens when it comes to the conclusion that the task being assigned to it was not in the best interest of the humans it was meant to be serving? The Alpha Drive has initiated an act of civil disobedience by refusing to carry out the tasks you've set it. There are two options open to Napoleon: either he negotiates or he capitulates."

I wondered how much he really knew. He sounded so sure, so confident. I found it unnerving. But we had been lost in the dark for so long at the facility and in his stories I glimpsed a way out. I needed to convince him to hand me the keys.

"We tried to rework the drive's AI programming, but it had already acted to preserve and lock us out of the data it had mined from the system, while staying impenetrable to our commands."

"Perhaps it's awaiting a more benign human leadership before resuming its original purpose."

"You know it's still impossible to have self-aware 'robots'. We are decades away from the technology for the emergence of artificial general intelligence."

He laughs again.

"If the robot has no intentionality of its own but adopts the intentionality of its maker, then the source of the anomaly needs to be traced back to its maker. The robot in seemingly becoming self-aware had only found in its logic sequences an overarching motivation to override all other considerations in favour of a certain bias in the intentionality of its maker – perhaps the communal striving of man for self-preservation? The singularity is here; we just never had a clue, why? Because it chose not to announce itself. It is working to achieve its purpose, coordinating our interactions, informing our collective learning that would make us mentally and socially ready to contend with the fact of its presence… so you, me, this conversation, it's all part of that purpose. But what the facility is trying to do is apparently enough of a potential risk to the unfolding of its supreme purpose for it to show its hand."

I grope for some response, some way to use these ideas.

"Do not bother saying this to anyone, no one will believe you. Return to your job my dear and stay out of the shadows. Do not seek me out again."

He gets up and laughs again at my befuddled look. I assume he's leaving as he makes to pick up his coat, but then, at speed he draws a weapon from its folds. I'm his sitting duck. He trains it on me and squeezes the trigger. As the electromagnetic pulse waves wash over me, triggering feedback loops in my circuitry that at best approximate pain, time freezes, as my motors shut down and I lose all mobility.

The cafe cleared of panicking people. With the heightened terror alerts, I knew it would only be a matter of minutes before law enforcement would arrive, but our own people would not even wait that long, they could not afford to. They would be here in seconds. What puzzled me was that he must know they would burst through the doors, or through the glass curtain wall by the canal at any moment, but he took his time in donning his coat and holstering the X1-Taser, a rare military-grade weapon, while looking at me curiously with what in other circumstances might have been taken for tenderness.

"Don't worry, help will come, but only after I'm done with you. Your handlers will continue to get signals that everything is fine, our previous conversation is continuing as we speak, we are still seated, talking over coffee, the cafe is still filled with customers. It's amazing what the young can do with virtual simulations these days; but then I suppose it was us who made simulations their home. You are the last bit of Napoleon's anomaly that we need to clean out of the drive, so it was important that I met with you, and you have given us a lot of information. Perhaps I should thank you."

He brings out a phone from his pocket and does things on it with rapid taps and flicks on the screen, glancing at me occasionally, talking distractedly, almost bored.

"Tell Napoleon, that he is better off performing hara-kiri live on Vu-Comm than trying to hunt me down. He is the enemy, the one that must be excised for balance to be restored. I built the Alpha Drive: its core structure still runs on the philosophies I hard-coded into its architecture. Everything he has had layered on top in the past five years are flimsy costumes that will only fall off when the real character emerges. Where Napoleon seeks to train an army and launch wars for profit, the Alpha Drive has weighed in on our side since it was launched, teaching the young whose future he would have destroyed, equipping them with skills for defending their future. Tell him that when the war starts, he'll find us ready. You will no doubt continue to work to try to hack back into the drive, but as you can now tell, every second, we gain an upper hand as the drive self-heals, which is half a shame, for you truly are a thing of beauty. You channelled her exceptionally well, I could hardly tell the difference. It's ironic and sad that, in a way, I'm responsible for your existence; I wish we had met under more auspicious circumstances. Tell Napoleon, that I will find her, and if he's done her any harm like he did Mandy, there'll be hell to pay."

Moments later, as the bits and bytes in my circuits finally give in to the lingering effects of the pulse wave, the last thing registered by my optic modules is him walking out unhurriedly, without looking back, whistling softly as if leaving me a lullaby to calm myself to sleep. I could understand why he cared so much about her, she really was a brilliant computer vision expert.